A Comprehensive History of CI/CD

Continuous integration and delivery have transformed software development. Soon, AI/ML will shape the future of CI/CD for smarter, more dynamic workflows.

From Concept to Standard: The Emergence of CI/CD Pipelines in Modern Development

In a previous blog post series, we delved into unit testing, including the groundbreaking but underutilized Extreme Programming (XP) practice known as test-driven development (TDD). With its innovative approach, XP has paved the way for many of today's software development practices, some of which have gained more traction than others.

One engineering practice from the original list of XP practices is responsible for some of the most critical changes in software development in the last 25 years. That practice is continuous integration, which is the CI in the CI/CD pipeline.

Origins of Continuous Integration (the CI in CI/CD)

In his book Object-Oriented Analysis and Design with Applications, Grady Booch first used the term continuous integration. However, its popularity and modern understanding come from the Extreme Programming community. Kent Beck and Ron Jeffries provided the first definition of continuous integration. In his book Extreme Programming Installed, Jeffries said: “Integration is a bear. We can’t put it off forever. Let’s do it all the time instead.”

The XP community introduced another practice called the “Ten-minute build.” This practice emphasizes the need for a quick build process, suggesting that development teams require a ten-minute or shorter build if they want to integrate and test the entire system many times daily.

Meeting the ten-minute integration and testing goal required rapid testing, achievable only through unit tests. Unit tests are small, targeted tests that check individual components of the software. The concept of writing these unit tests before the code, known as test-driven development (TDD), was instrumental in ensuring thorough testing of the entire system within the ten-minute window.

Early Challenges of Continuous Integration (the CI in CI/CD)

In the early days of continuous integration, building, deploying, and testing by running unit tests was challenging. A significant hurdle was the time it took to build the system. Jeffries and his co-authors once encountered a team whose system took a week to build, highlighting the early obstacles faced in implementing continuous integration.

In my first job in the early 90s, our product programming languages were C and C++. Every time we built, we went for a coffee break. We had enough time to prepare the coffee and wait until it was ready. Compiling the product alone, never mind deploying and testing it, took far more than ten minutes.

Fast builds are much more accessible today as we break products into services (microservices) and treat them as independent systems. Computing power has considerably improved since the late 1990s. In other words, newer architecture patterns emerged, and the underlying hardware kept improving.

The second challenge was tooling. While Kent Beck and Ron Jeffries introduced continuous integration in the late 90s, the first CI server did not appear in the marketplace until 2001. The introduction of CruiseControl provided the first opportunity for early adopters of agile software development to implement continuous integration (CI).

Still, the early days of continuous integration (CI) were challenging. CI requires compiling, inspecting, deploying, and testing the product quickly. If you worked at a company with a product portfolio, your system included many different products working together.

At Ultimate Software, we had a portfolio of products covering human resources, payroll, benefits, recruitment, onboarding, performance management, time management, and a few others. Many of these products had complicated deployment mechanisms. We created a build and deployment team. The team was responsible for bringing everything together and developing tools to deploy all these products in a SaaS environment.

The third challenge that many teams faced was maintaining the integrity of their tests. In the early days, it was common to find teams with broken tests in their CI system (which eventually evolved into a CI/CD pipeline). It took time for many software development teams to instill the cultural aspect of treating failing tests as the top priority.

From CI Server to CI Pipeline

The final challenge was dealing with the legacy of many years of waterfall development. Most teams did not have unit tests. If they had any test automation, it was GUI-based test automation. At Ultimate Software, while we made progress in getting engineers to write unit tests, the most successful approach was focusing on integration tests and bringing those into our CI system, in essence, building a pipeline.

Our first pipelines at Ultimate Software started with the faster tests (unit tests) and then moved to the slower tests. Introducing FitNesse allowed us to implement Acceptance Test-Driven Development (ATDD) and reduce our dependency on GUI-based test automation. Many teams, though not all, could execute all their automated tests at least once per day.

Despite the challenges, we were constantly breaking dependencies all over the place: in the codebase, our tests, our processes, and even our organization. It was hard and painful work, but we kept progressing and reducing our cycle time. We saw significant improvements in our overall testing time and a noticeable increase in the number of releases per year.

We were a classic case of the ice cream cone anti-pattern; see the image below to understand what I mean:

We had manual tests and GUI-based test automation (end-to-end tests in the diagram above). We introduced unit testing and ATDD to build up the bottom layers of the ice cream cone. For many years, we continued to have an ice cream cone. We had to wait until we started developing new products to be able to implement a model like the testing pyramid.

At the same time, we started building cloud-native applications, and the industry took its first steps to define and implement continuous delivery (CD) and continuous deployment (CD).

Lean + CI = CI/CD Pipeline

Lean had a significant influence on software development. One example is the use of Poka-yoke in the emergence of continuous delivery. According to Wikipedia, Poka-yoke is a Japanese term that refers to “mistake-proofing” or “error prevention.” A deployment pipeline applies the same idea: it is a set of validations through which a piece of software must pass on its way to release.

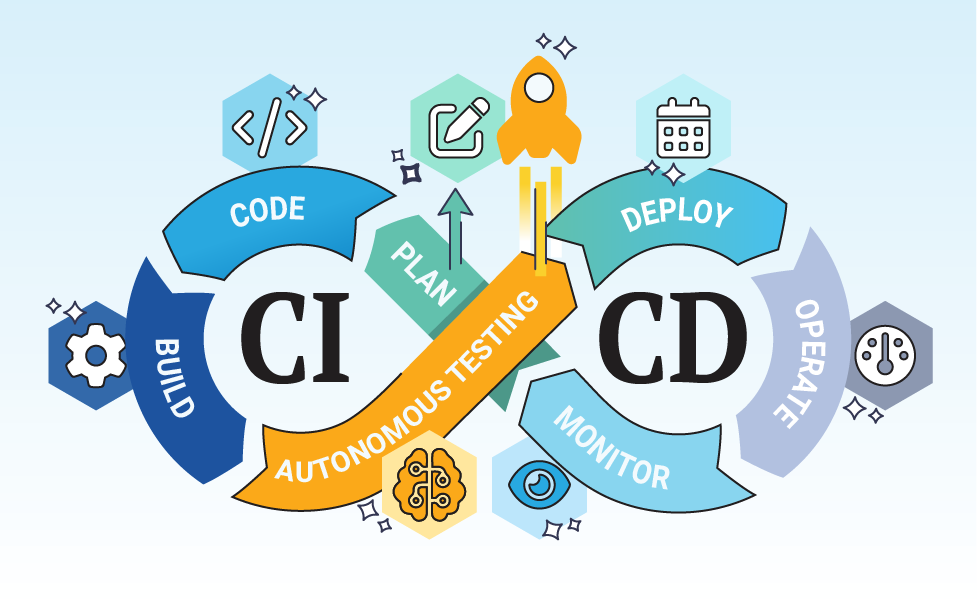

The diagram below shows a CI/CD pipeline:

Source: https://blog.openreplay.com/what-is-a-ci-cd-pipeline/

When a developer commits a code change, it triggers actions and validations. If necessary, the code is compiled and packaged by a build server. The system deploys the new package to a test environment, where tests are executed. You always want your faster tests to run first. That helps the team get quicker feedback about the impact of the new code change on the product.

If the first validation passes, you move on to the next one. Usually, that means integration tests. These days, those are BDD tests. In continuous delivery (CD), the new package will continue moving through the pipeline until it is releasable.
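The flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular CI server works: the `Stage` class, the `run_pipeline` function, the stage names, and the durations are all hypothetical. It only shows the two ideas from the text, running the faster validations first and stopping at the first failure.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    expected_minutes: float   # rough expected duration, used only for ordering
    run: Callable[[], bool]   # returns True when the validation passes


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages from fastest to slowest and stop at the first failure."""
    executed = []
    for stage in sorted(stages, key=lambda s: s.expected_minutes):
        executed.append(stage.name)
        if not stage.run():
            # Fail fast: the slower stages never start.
            return False, executed
    return True, executed


# Hypothetical stages; the lambdas stand in for real test runs.
stages = [
    Stage("end-to-end tests", 45.0, lambda: True),
    Stage("unit tests", 2.0, lambda: True),
    Stage("integration tests", 15.0, lambda: False),  # simulated failure
]

releasable, executed = run_pipeline(stages)
print(releasable, executed)
```

In this sketch the end-to-end stage never runs, because the integration stage fails first. That is the quicker feedback the ordering is meant to provide.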

The Two CDs in the CI/CD Pipeline

You may notice two terms: Continuous Delivery (CD) and Continuous Deployment (CD). Both are the “CD” in the CI/CD pipeline and evolutions of continuous integration (CI). Some people suggest Continuous Deployment is the goal, but I disagree. Continuous deployment and delivery serve different needs and fit better in specific contexts than others.

According to Amazon Web Services (AWS), continuous delivery is a software development practice where code changes are automatically prepared for a release to production. According to IBM, continuous deployment is a strategy in software development where code changes to an application are released automatically into the production environment.

In essence, a CI/CD pipeline, where CD means continuous deployment, is a fully automated pipeline that automatically deploys a new package to production after it successfully passes all the validations in the CI/CD pipeline.

A CI/CD pipeline, where CD means continuous delivery, is primarily automated but requires at least one manual step before deploying the new package to production.
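One way to picture the difference is as a single decision at the end of the pipeline. The sketch below is an assumption-laden illustration: the `Mode` enum and the `should_deploy` function are hypothetical names, and a real pipeline would model the manual step as an approval workflow rather than a boolean flag.

```python
from enum import Enum


class Mode(Enum):
    CONTINUOUS_DELIVERY = "delivery"
    CONTINUOUS_DEPLOYMENT = "deployment"


def should_deploy(mode: Mode, validations_passed: bool,
                  manual_approval: bool = False) -> bool:
    """Decide whether a package that reached the end of the pipeline goes to production."""
    if not validations_passed:
        return False              # a failed validation blocks both modes
    if mode is Mode.CONTINUOUS_DEPLOYMENT:
        return True               # fully automated: no human in the loop
    return manual_approval        # continuous delivery: a person makes the final call


print(should_deploy(Mode.CONTINUOUS_DEPLOYMENT, validations_passed=True))
print(should_deploy(Mode.CONTINUOUS_DELIVERY, validations_passed=True))
print(should_deploy(Mode.CONTINUOUS_DELIVERY, validations_passed=True, manual_approval=True))
```

Everything before that final decision can be identical in both modes. Only the last gate differs.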

In my experience, you find continuous deployment in B2C applications. One of the first examples was a cloud-based game. Today, companies like Netflix, Amazon (retail website), Google, Etsy, and Spotify use continuous deployment. You may notice a pattern: none of these systems are mission-critical. I am sure some Swifties believe being unable to listen to Taylor on Spotify might end their lives, but we know that is not true.

Companies working on Enterprise B2B applications tend to have higher compliance requirements. If your market is the US federal government, you likely need continuous delivery instead of continuous deployment. If your product focuses on verticals like healthcare, consider continuous delivery over continuous deployment. In some instances, deployment to production is only allowed if a human manually completes a suite of acceptance tests. In that case, you have no choice but to go with continuous delivery.

The critical difference between continuous delivery and continuous deployment is the specific quality standard you must follow. In other words, how much and what kind of testing do you need in your CI/CD pipeline?

To answer these questions, I always go back to Marick’s test matrix:

The choice between the two depends mainly on what you must cover in quadrants 3 and 4. For example, if you do not need a lot of performance testing and can manage it using your Application Performance Monitoring (APM) tool, then you can probably use continuous deployment. You can push most performance testing to the right and monitor your production environment. In the end, it will come down to your definition of quality. How much coverage do you need to have? Are there any compliance frameworks you must follow?

Your context will guide you in deciding whether to target continuous delivery or continuous deployment. A basic test is: if your product fails, will it cause physical harm or damage critical infrastructure? If your product is a GPS service used by shipping containers or oil tankers, you want to focus on safety. If you are Netflix, continuous deployment is just fine.

From CI/CD pipelines to DevOps

We move from continuous integration (CI) to continuous delivery and deployment (CD) and then to the next phase, DevOps.

CI/CD pipelines are a cornerstone of DevOps. They force development and operations teams to break down the walls between them and work together.

In the past, I have said that for Lean practitioners like myself, DevOps was an obvious evolution of Agile. The reason for this is the history of Lean. DevOps reminds me of the creation of just-in-time at Toyota (the birthplace of Lean).

Toyota discovered they had become very efficient at their production lines thanks to Lean thinking, but the rest of their supply process could have been more efficient. They realized they had to work with their suppliers and distributors to help them embrace continuous improvement and work in small batches so they could adapt to changes quickly. A similar story explains DevOps. Software development teams became more efficient using practices like continuous integration, but they had to deal with operations teams still working in big batches.

The Future of CI/CD Pipelines

If you read the State of DevOps Report 2023, you will think half of the development teams have working CI/CD pipelines. I am skeptical that is the case. Even the 18% of respondents who meet the DORA metrics at the Elite performance level seems high. In theory, that is the percentage of companies using continuous deployment, as they can release code on demand, deploy a new change in less than a day, and recover from a failed deployment in less than an hour.

The strongest signal of bias in the respondent population is that the percentage at the low performance level (17%) is lower than the percentage at the elite level. Still, the data validate that CI/CD pipelines are an established and widely used software development practice.

The immediate future of CI/CD pipelines is the integration and support of AI/ML solutions. At Testaify, we designed our product with an open API, which makes it easy to add to your CI/CD pipeline. Our product enhances your CI/CD pipeline by getting more testing done sooner rather than later. You can now execute the most valuable functional tests, system-level tests, within your CI/CD pipeline and rethink your whole testing strategy.

Regarding CI/CD pipelines and AI/ML, the opportunity now exists to make them more dynamic and smarter. Today, these pipelines are linear. Adding AI/ML allows them to become more dynamic by discovering the software development team's patterns. Are most of the defects that stop the pipeline showing up in integration tests instead of unit tests? Should the CI/CD pipeline dynamically change the order of execution to accommodate this pattern?
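To make the reordering question concrete, here is a small sketch. It is deliberately not AI/ML: a plain failure count stands in for whatever a learned model would predict, and the `reorder_by_failure_history` function, the stage names, and the history are hypothetical.

```python
from collections import Counter
from typing import Iterable, List


def reorder_by_failure_history(stages: List[str],
                               past_failures: Iterable[str]) -> List[str]:
    """Put the stages that stopped the pipeline most often first."""
    counts = Counter(past_failures)
    # sorted() is stable, so stages with equal counts keep their original order.
    return sorted(stages, key=lambda name: -counts[name])


default_order = ["unit", "integration", "end-to-end"]
# Hypothetical record of which stage stopped each recent failed run.
history = ["integration", "integration", "unit", "integration", "end-to-end"]

new_order = reorder_by_failure_history(default_order, history)
print(new_order)
```

A real implementation would also need to weigh how long each stage takes, since moving a slow stage to the front trades away the fast feedback that unit tests provide.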

Introducing AI/ML provides new opportunities to improve many aspects of software development, including CI/CD pipelines.

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.
