The Changing Landscape of Integration Testing

One of the most intriguing developments in software testing is the growing significance of integration testing. This shift can be attributed mainly to the rise of cloud computing and API architectures, making integration testing an indispensable part of any software testing strategy. This was not always the case.

From Theory to Practice: Achieving a Clear Definition of Integration Testing

Before we discuss the specifics of integration testing evolution, we need to resolve the issue of a clear definition. I am afraid it is not as simple as you might think.

In our blog post about unit testing, we talked about integration testing by quoting the following section from Louise Tamres’s book “Introducing Software Testing”:

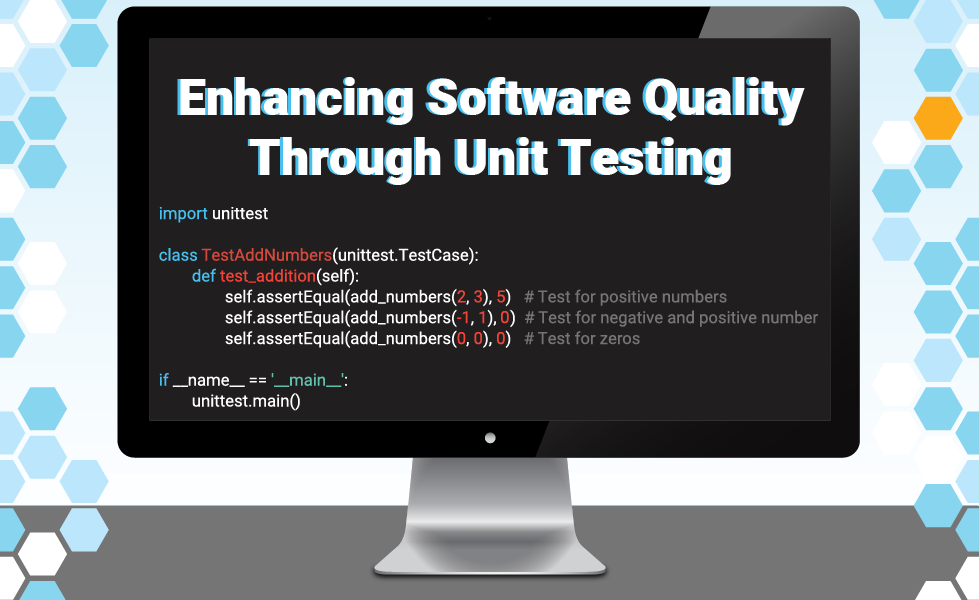

- Unit level testing concerns the testing of the smallest compilation unit of some application. The goal of unit testing is to demonstrate that each individual unit functions as intended prior to integrating it with other units. The tester typically invokes the unit and enters data via a test driver that sends the test execution information to the unit-under-test.

- Integration level testing consists of combining the individual units and ensuring that they function together correctly.

- System level testing applies to the entire application as a whole. This is often how the end user views the system. The tester typically interacts with the application by entering data the same way as would the end user.

Looking carefully at this definition, you can see that integration testing is a level between unit and system testing. That creates problems as you must clearly define two boundaries to explain integration testing. The first boundary with unit testing is that integration testing must include more than one unit.

Getting Closer to the Integration Test Definition

According to Wikipedia, a unit is defined as a single behavior exhibited by the system under test (SUT), usually corresponding to a requirement. That definition is problematic due to the use of the word “requirement.” In theory, a unit could be huge in this context. It is true that you write a software unit intending to implement some specific requirement, but the unit alone is unlikely to cover the whole requirement. Let's see if we can find a more precise definition.

Here is one from a Synopsys blog post: A unit is a small testable software component. Ok, that is short and emphasizes small units, but now we need to ask: what is a component?

Here is another definition: Software unit = an atomic-level software component of the software architecture that can be subjected to stand-alone testing.

Ok, that is a little better, as we can narrow it to stand-alone testing. In the end, the best definition I have found is the one from Michael Feathers in his excellent book “Working Effectively with Legacy Code.” It is not a unit definition but a definition of a unit test. Here is what he says:

Unit tests run fast. If they don’t run fast, they aren’t unit tests.

Other kinds of tests often masquerade as unit tests. A test is not a unit test if:

- It talks to a database.

- It communicates across a network.

- It touches the file system.

- You have to do special things to your environment (such as editing configuration files) to run it.

In other words, it must run fast and be completely isolated from the infrastructure components, configuration files, and other unit tests. Unit tests test a class (object-oriented programming languages) or a function (functional programming languages). By the way, when Feathers says fast, he means well under 100 ms: in the book, he calls a unit test that takes a tenth of a second to run a slow unit test.
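To make Feathers's criteria concrete, here is a minimal sketch of a test that satisfies them. The function `apply_discount` and its rules are hypothetical, invented only for illustration; the point is that the test touches no database, network, file system, or environment configuration.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical unit under test: pure arithmetic, no I/O of any kind."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    # Nothing here needs a database, a network, the file system,
    # or any special environment setup, so it runs in isolation.
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(10_000, 10), 9_000)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(10_000, 101)
```

Saved in a file, this can be run with `python -m unittest`. The moment `apply_discount` started reading prices from a table or a remote service, the same test would stop being a unit test by Feathers's rules.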

Based on this definition, an integration test includes at least one unit that talks to another. It can depend on infrastructure components and might require system reconfiguration. Binder’s definition of an integration test in his book “Testing Object-Oriented Systems” has an essential element: The units included are physically dependent or must cooperate to meet some requirement. I would like to expand and suggest that the integration test will validate an explicit requirement.

So, integration tests include more than one software unit and other infrastructure components associated with explicit requirements. Associating integration tests with an explicit requirement will help narrow the scope.
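As a rough illustration of that definition, the sketch below combines two units with an infrastructure component and ties the test to one explicit requirement. Everything in it is an assumption made for the example: the class names `AccountRepository` and `TransferService`, the table layout, and the requirement itself. It uses an in-memory SQLite database so it stays self-contained.

```python
import sqlite3
import unittest


class AccountRepository:
    """Hypothetical unit 1: persists balances in a database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS accounts (name TEXT PRIMARY KEY, cents INTEGER)"
        )

    def save(self, name: str, cents: int) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO accounts (name, cents) VALUES (?, ?)",
            (name, cents),
        )

    def balance(self, name: str) -> int:
        row = self.conn.execute(
            "SELECT cents FROM accounts WHERE name = ?", (name,)
        ).fetchone()
        if row is None:
            raise KeyError(name)
        return row[0]


class TransferService:
    """Hypothetical unit 2: a business rule that depends on the repository."""

    def __init__(self, repo: AccountRepository) -> None:
        self.repo = repo

    def transfer(self, source: str, target: str, cents: int) -> None:
        if cents > self.repo.balance(source):
            raise ValueError("insufficient funds")
        self.repo.save(source, self.repo.balance(source) - cents)
        self.repo.save(target, self.repo.balance(target) + cents)


class TransferIntegrationTest(unittest.TestCase):
    # Assumed explicit requirement: a transfer debits one account
    # and credits the other by the same amount.
    def test_transfer_moves_funds(self):
        conn = sqlite3.connect(":memory:")
        repo = AccountRepository(conn)
        repo.save("current", 100_000)
        repo.save("savings", 200_000)

        TransferService(repo).transfer("current", "savings", 50_000)

        self.assertEqual(repo.balance("current"), 50_000)
        self.assertEqual(repo.balance("savings"), 250_000)
        conn.close()
```

Because the test talks to a database, it fails Feathers's unit test criteria even though it is small; the two cooperating units plus the database are what make it an integration test.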

That more or less resolves the boundary with unit testing. What about the boundary with system testing? That is not as difficult. System testing must include all units, infrastructure components, and configuration changes required for the application to be used by its intended users.

Maybe it is a little more complicated. In the case of a modern cloud API-based application, is a test that interacts with the API rather than the UI an integration test or a system test? It depends. If the product has a productized API exposed to users, your API test can be a system test. If the API is internal and unavailable to users, you have an integration test.

In other words, what constitutes the system will depend on the combined perspective of all its users. This definition is still challenging because an integration test scope could be tiny, dealing with only a couple of units, or massive, dealing with most of the system except for one unit.

Integration Testing Challenges

Early in my career, the focus was on system testing. I am describing the software development context many years before agile development. Very few engineers did any unit testing. Some integration testing happened, but it was limited. There are several reasons for that:

- Limited tools: Before XP and the first xUnit testing frameworks (SUnit for Smalltalk came first, and JUnit for Java popularized the approach), unit testing depended on engineers writing their own tools or handcrafting their unit tests. Most companies did not understand unit testing, so no investment was made in this area. It was even worse for integration testing because of the complexity of setting up an environment that includes multiple dependencies but excludes some components.

- Cultural bias against engineers doing testing: When QA departments controlled the process, certain QA team members considered the idea of engineers conducting any testing laughable. Executives thought engineers conducting testing was a waste of money; some still do. They wanted software engineers to write feature code.

- Lack of knowledge regarding unit and integration testing: As you probably noticed, the software testing community is not very good at generating consistent definitions. As such, we have a mess out there. People conduct a story test through the UI, which they call unit testing. Famous software testing books tell you that a unit test can include a cluster of classes. It cannot. Sorry, Binder, but that is incorrect.

- Integration testing is too broad: As I mentioned earlier, the definition of integration testing is expansive. It can be as small as testing two units together or as big as testing the whole system without one component. That ocean can overwhelm people. How do I come up with an integration test? It is essential to narrow the scope, as the guidance available is limited to what you can find in certain software testing books.

- Confusion about who is responsible for integration testing: Integration testing was in limbo because it exists between unit testing (usually the responsibility of the software engineers) and system testing (usually the responsibility of the QA team). In essence, integration testing was an orphan in many situations. Our siloed waterfall mentality made integration testing the forgotten level in many organizations. To perform integration testing, you needed tools that did not exist, and only the engineers could help you. Plus, it required explicit requirements to test, but QA was already conducting system testing with those requirements. Why set up an integration test environment when you already have your system test environment? Why validate the requirements through integration tests when you are already doing it through system testing?

Agile and the Increased Importance of Integration Testing

As stated in Cem Kaner, Jack Falk, and Hung Quoc Nguyen's book “Testing Computer Software,” integration testing is a form of incremental testing. Incremental testing makes it easier to pinpoint a defect's root cause. When you test one service, the error is in that service or the tool you use to test the service. The whole system is involved in a system test, which can significantly delay finding the root cause of a defect. In other words, the fundamental advantage of integration testing is that it reduces the cycle time to resolve a defect.

Agile testing recognized this reality early on and developed new models to help development teams organize their testing by level. The first one was the agile testing pyramid.

The agile testing pyramid calls for most testing to happen at the unit level. It suggests that most of your tests should be isolated unit tests, as they run faster. In this version of the pyramid, unit tests are followed by service tests (integration tests), and finally, the smallest number of tests should be UI tests (system tests).

Other models have emerged challenging these recommendations. The most popular alternative to the testing pyramid is the testing trophy.

In this model, the recommendation is to have more integration tests than unit and system tests. Both models invert what was the industry reality for a long time, when most automated tests were system tests. One of the main reasons many moved to the trophy model is the evolution of test-driven development (test-first).

Bringing Customer Feedback Back into the Picture

Because Agile tries to limit the documentation regarding requirements, we focus on collaboration with our customers instead. Still, we need something to take the place of that documentation. Terms like executable specification became popular. The evolution looks something like this:

Extreme Programming (XP) introduced the world to test-driven development. TDD drives development through tests, but those tests are for units. In other words, you get unit testing as a side benefit of TDD. TDD is well known in the industry, but even today, a minority of developers use it. Still, TDD brought momentum that generated many software development tools and techniques to help with unit testing, such as unit testing frameworks, mocking frameworks, stubs, etc. The ecosystem for unit testing is so large now that every significant programming language in the market has at least one unit testing framework available. The most popular programming languages have multiple unit testing frameworks in the market.

However, TDD was insufficient and did not help much with customer collaboration. XP teams started using customer tests, which evolved into Acceptance test-driven development (ATDD). Because acceptance testing is about a set of tests a customer has created or approved, in theory, it provides clear acceptance criteria to say something is done and ready for release.

Ward Cunningham, one of the original signatories of the Agile Manifesto, proposed Fit (Framework for Integrated Test) to make the acceptance criteria executable. Using Fit, you can capture requirements as integrated tests. Fit uses tables of examples, connected to the application by small pieces of code called fixtures, to validate customer expectations in an automated fashion.

The name tells you everything about the importance of integration testing. At the middle level, integration testing allows you to communicate more effectively with customers than unit tests. It is at this level that you can define explicit requirements.
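The table-driven idea behind Fit can be approximated in a few lines. This is only a sketch of the concept, not Fit's actual API: the table contents, the `discount` function, and the `run_table` helper are all hypothetical names chosen for the example.

```python
from typing import Callable

# A customer-readable table: a header row, then one example per row.
# The column names and values are illustrative assumptions.
DISCOUNT_TABLE = [
    ("order_total", "discount()"),
    (50, 0),
    (100, 5),
    (250, 25),
]


def discount(order_total: int) -> int:
    """Hypothetical system under test: 5% from 100, 10% from 250."""
    if order_total >= 250:
        return order_total * 10 // 100
    if order_total >= 100:
        return order_total * 5 // 100
    return 0


def run_table(table: list, sut: Callable[[int], int]) -> list:
    """Fixture-like glue: feed each row to the SUT and mark it right or wrong."""
    results = []
    for given, expected in table[1:]:  # skip the header row
        actual = sut(given)
        verdict = "right" if actual == expected else f"wrong (got {actual})"
        results.append(f"{given} -> {expected}: {verdict}")
    return results


for line in run_table(DISCOUNT_TABLE, discount):
    print(line)
```

The customer owns the table and the engineers own the glue code, which is why the examples can double as requirements.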

Still, Fit is cumbersome and not easy to use. Later on, we got Behavior-Driven Development (BDD). We can write our acceptance tests using plain English instead of some weird table. The following is an example of a BDD scenario:

Given I have a current account with $1,000.00

And I have a savings account with $2,000.00

When I transfer $500.00 from my current account to my savings account

Then I should have $500.00 in my current account

And I should have $2,500.00 in my savings account
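To show how a scenario like this becomes executable, here is a hand-rolled sketch of the mechanism BDD tools rely on: matching each line of text to a step function. It is not the API of Cucumber, behave, or any other real framework. The `step` decorator, the `run_scenario` runner, and the in-memory `accounts` dictionary are assumptions made for illustration; in a real project the steps would call your service layer.

```python
import re
from decimal import Decimal

SCENARIO = """\
Given I have a current account with $1,000.00
And I have a savings account with $2,000.00
When I transfer $500.00 from my current account to my savings account
Then I should have $500.00 in my current account
And I should have $2,500.00 in my savings account
"""

STEPS = []  # (compiled pattern, handler) pairs


def step(pattern):
    """Register a handler for scenario lines that match the pattern."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def money(text):
    """Convert '1,000.00' to Decimal('1000.00')."""
    return Decimal(text.replace(",", ""))


@step(r"I have a (\w+) account with \$([\d,.]+)")
def have_account(accounts, name, amount):
    accounts[name] = money(amount)


@step(r"I transfer \$([\d,.]+) from my (\w+) account to my (\w+) account")
def transfer(accounts, amount, source, target):
    accounts[source] -= money(amount)
    accounts[target] += money(amount)


@step(r"I should have \$([\d,.]+) in my (\w+) account")
def should_have(accounts, amount, name):
    assert accounts[name] == money(amount), f"{name}: {accounts[name]}"


def run_scenario(text):
    accounts = {}  # shared state passed to every step
    for line in text.strip().splitlines():
        # Drop the leading keyword (Given/When/Then/And) before matching.
        body = line.split(" ", 1)[1]
        for pattern, handler in STEPS:
            match = pattern.fullmatch(body)
            if match:
                handler(accounts, *match.groups())
                break
        else:
            raise LookupError(f"no step matches: {line}")
    return accounts
```

Calling `run_scenario(SCENARIO)` executes the five lines in order and raises an `AssertionError` if either `Then` expectation is not met.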

Still, even BDD is used by a minority of software development teams. I talked about it in a previous post. Also, some teams use BDD, specifically the Gherkin language, to drive their UI web tests with Selenium. That is not BDD.

Together with frameworks like BDD, we have the evolution of software applications. Today, most applications are cloud-based solutions that use an API architecture. In other words, modern cloud applications have a service layer perfect for integration testing.

Finally, thanks to BDD, frameworks are available to help us automate the BDD scenarios. As such, the importance of integration testing is significantly higher now than three decades ago.

Requirements and Integration Testing

Thanks to agile processes like BDD, requirements and integration testing are intermingled today. They coexist in those BDD scenarios and the code that allows them to become executable specifications.

Having explicit requirements tied to your integration testing also helps testing in other ways. As mentioned before, integration testing is an ocean of possibilities. We need to narrow that scope. The best way is to start with integration tests directly linked to explicit requirements. If those fail, you can then use your unit tests to see if you can find the problem. If that does not work, you can build a specific integration test that reduces the number of components involved. This approach will help you isolate the root cause. There is no need to add other integration tests unless they are needed to identify and validate a specific defect.

Start with integration tests tied to specific requirements and only add more if needed to help you with particular bugs in the system.
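The narrowing step can be sketched as follows, under stated assumptions: `Inventory`, `Pricing`, and `Checkout` are hypothetical components, and the requirement in the comment is invented for the example. The first test exercises all three components together; the second swaps one of them for a stub to reduce the number of components involved.

```python
class Inventory:
    """Hypothetical component A: tracks stock levels."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, quantity):
        if self.stock.get(sku, 0) < quantity:
            return False
        self.stock[sku] -= quantity
        return True


class Pricing:
    """Hypothetical component B: prices an order line in cents."""

    def __init__(self, prices):
        self.prices = dict(prices)

    def total(self, sku, quantity):
        return self.prices[sku] * quantity


class Checkout:
    """Hypothetical component C: coordinates A and B."""

    def __init__(self, inventory, pricing):
        self.inventory = inventory
        self.pricing = pricing

    def place_order(self, sku, quantity):
        if not self.inventory.reserve(sku, quantity):
            raise ValueError("out of stock")
        return self.pricing.total(sku, quantity)


class FixedPricing:
    """Stub that takes the real Pricing component out of the test."""

    def total(self, sku, quantity):
        return 100


def test_requirement_level():
    # All three components together, tied to one assumed requirement:
    # "an in-stock order is charged price times quantity".
    checkout = Checkout(Inventory({"pen": 5}), Pricing({"pen": 150}))
    assert checkout.place_order("pen", 2) == 300


def test_narrowed_without_pricing():
    # Same flow with Pricing stubbed out. If the test above fails and
    # this one passes, Pricing becomes the first place to look.
    inventory = Inventory({"pen": 5})
    checkout = Checkout(inventory, FixedPricing())
    assert checkout.place_order("pen", 2) == 100
    assert inventory.stock["pen"] == 3
```

The narrowed test is only worth keeping if it helped pin down a real defect; otherwise the requirement-level test is enough.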

What is the future of integration testing? To answer that, we need to examine AI/ML and its interaction with integration testing.

AI/ML and Integration Testing

As we mentioned before, the move to the cloud and the adoption of API architectures greatly impacted the importance of integration testing. It also enables using AI/ML tools to enhance integration testing.

Thanks to the spread of specific API architectures like REST, it is possible to discover, generate, and execute test cases against API endpoints. Implementing AI/ML API testing is simpler than AI/ML system testing. Many tools exist that help you with API test generation and execution. At Testaify, we plan to offer the capability to test your API using AI/ML.
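To give a feel for the generation step, here is a deliberately simple sketch. It involves no AI/ML and does not represent how Testaify or any other tool works; it only enumerates candidate requests from a hand-written endpoint description. The endpoint, its parameters, and the candidate values are all hypothetical.

```python
from itertools import product

# Hypothetical endpoint description; a real tool might read it from an API spec.
ENDPOINT = {
    "method": "GET",
    "path": "/accounts",
    "params": {
        "limit": [0, 1, 100, 101],  # boundary values around an assumed 1..100 range
        "sort": ["name", "balance", "bogus"],
        "order": ["asc", "desc"],
    },
}


def generate_cases(endpoint):
    """Yield one request description per combination of parameter values."""
    names = list(endpoint["params"])
    for values in product(*(endpoint["params"][n] for n in names)):
        yield {
            "method": endpoint["method"],
            "path": endpoint["path"],
            "query": dict(zip(names, values)),
        }


cases = list(generate_cases(ENDPOINT))
print(len(cases))  # 4 * 3 * 2 = 24 combinations for a single endpoint
```

Even three small parameters on one endpoint produce 24 requests, which hints at why exhaustive enumeration does not scale and why smarter selection of test cases is attractive.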

Having AI/ML offerings at each of the three levels—unit, integration, and system—is essential to achieving a comprehensive software testing strategy. It allows us to significantly reduce the impact of the fundamental challenge in software testing: the large number of combinations to test.

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.
